Networking for backend engineers: OSI model

Understanding the basics of networking through the OSI model, and what happens when you send an HTTP request.

Layer 4 vs layer 7 load balancer? TCP vs UDP? HTTP/1, HTTP/2 or HTTP/3?! Okay, what are you even saying? Yep. Networking is what we're talking about.

If you're a backend engineer, it helps to understand what happens under the hood when you're building that beautiful API. Having mostly worked in small, cross-functional teams, I've had to deal with networking myself but have never been entirely clear on what's really happening. You might say "ah, but we're in the cloud! Networking configures itself", and while that is mostly true, knowing the basics of networking can help you build more performant and robust architectures. To that end, I've been learning more about networking, and I'd like to take you along on that journey with me.

In this post, we'll look at the OSI model and dive into how we can understand the networking stack using this model.

What is the OSI Model?

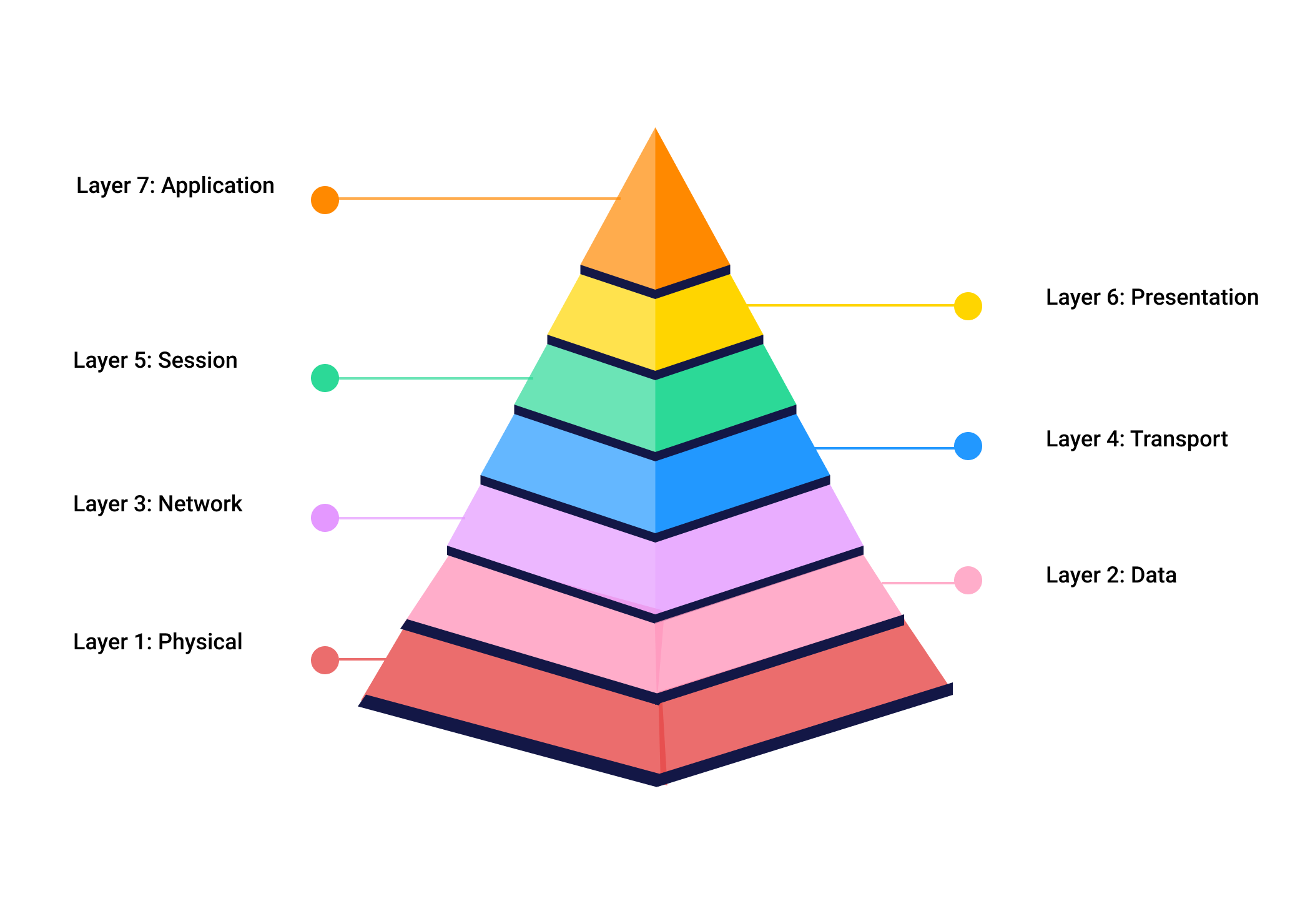

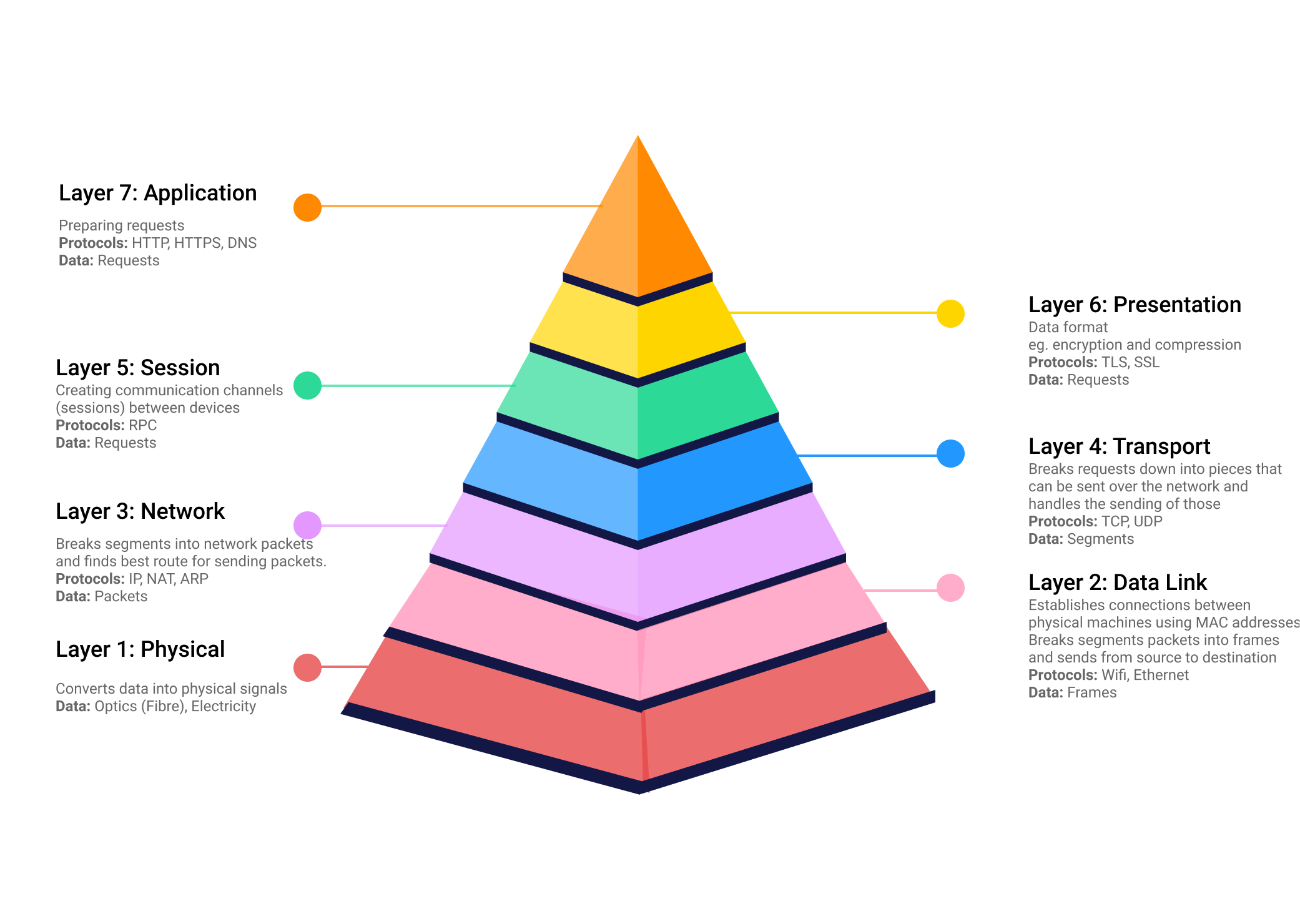

OSI stands for Open Systems Interconnection, and it's a conceptual model that describes how computers communicate over a network. It splits networking into seven layers, from layer 1, the Physical layer, up to layer 7, the Application layer, with each layer more abstract than the one below it. The Application layer is the one engineers will be most familiar with, as this is where protocols like HTTP live.

What are the layers in the OSI Model?

You'll often hear terms like layer 7 load balancer or layer 4 reverse proxy. These indicate which layer the technology operates at, which in turn determines the kinds of features it can offer.

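Each layer has protocols associated with it. For example, at layer 4 (Transport), the two main protocols are TCP and UDP. As with all things, each protocol fits a specific use case: TCP guarantees reliable, in-order delivery at the cost of extra round trips, while UDP drops those guarantees in exchange for lower overhead.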
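As a rough illustration of how differently the two behave from code, here is a minimal sketch using Python's standard `socket` module over localhost. With TCP (`SOCK_STREAM`), a connection has to be established before any data flows, and the data arrives as a reliable byte stream; with UDP (`SOCK_DGRAM`), the sender simply fires a datagram at an address with no connection and no delivery guarantee. The function names are made up for this example.

```python
import socket


def tcp_send(message: bytes) -> bytes:
    """Send bytes over a TCP connection on localhost; return what the server read."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS choose a free port
        server.listen(1)
        port = server.getsockname()[1]
        # create_connection returns once TCP's handshake with the listener has completed
        with socket.create_connection(("127.0.0.1", port)) as client:
            conn, _ = server.accept()
            with conn:
                client.sendall(message)
                client.shutdown(socket.SHUT_WR)  # no more data: the server sees end-of-stream
                received = b""
                while chunk := conn.recv(4096):  # a stream may arrive in any number of chunks
                    received += chunk
                return received


def udp_send(message: bytes) -> bytes:
    """Send one UDP datagram on localhost; return what the receiver got."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(1.0)  # nothing guarantees delivery, so don't wait forever
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            sender.sendto(message, receiver.getsockname())  # no connection, no handshake
            data, _addr = receiver.recvfrom(65535)  # one datagram in, one datagram out
            return data
```

On loopback both calls deliver the message, but only the TCP version would notice and recover if something went missing on a real network.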

Knowing the difference between TCP and UDP helps you understand higher-level protocols like HTTP, which is built on top of TCP (up to HTTP/2; HTTP/3 runs over QUIC, which is built on UDP). That in turn gives you insight when you're troubleshooting a networking issue or doing performance optimization. For example, I'd always known that you should locate your servers close to your users and that smaller payloads are better, but I never truly understood why until I learned how TCP breaks data into segments and how each segment must be acknowledged by the receiver. I'd thought one request would equal one round trip, but that only holds on an already-open connection when the whole request fits within the amount of unacknowledged data the sender is allowed to have in flight, and no segment is lost and needs retransmission.
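A back-of-the-envelope sketch can make the "closer and smaller is better" intuition concrete. The Python below assumes that light travels through fibre at roughly 200 km per millisecond, that each segment carries at most 1,460 bytes (typical on Ethernet), and an idealised TCP slow start that begins with a window of 10 segments and doubles it every round trip with no losses. All three constants are assumptions, and the model ignores the connection handshake, queuing and server processing, so treat the results as lower bounds.

```python
import math

FIBRE_KM_PER_MS = 200  # assumption: light in fibre covers roughly 200 km per millisecond
MSS_BYTES = 1460       # assumption: max TCP payload per segment on a 1500-byte Ethernet MTU
INITIAL_WINDOW = 10    # assumption: segments the sender may send before the first ACK


def min_rtt_ms(distance_km: float) -> float:
    """Propagation delay there and back; real RTTs are higher (routing, queuing, processing)."""
    return 2 * distance_km / FIBRE_KM_PER_MS


def segments_needed(payload_bytes: int) -> int:
    return max(1, math.ceil(payload_bytes / MSS_BYTES))


def round_trips_to_deliver(payload_bytes: int) -> int:
    """Idealised slow start: the window doubles each round trip and nothing is lost."""
    remaining, window, trips = segments_needed(payload_bytes), INITIAL_WINDOW, 0
    while remaining > 0:
        remaining -= window
        window *= 2
        trips += 1
    return trips


for size in (10_000, 100_000):
    trips = round_trips_to_deliver(size)
    total_ms = trips * min_rtt_ms(10_000)
    print(f"{size} bytes over 10,000 km: {trips} round trip(s), at least {total_ms:.0f} ms")
```

Under these assumptions, a 10,000-byte response to a user 10,000 km away needs one round trip (at least 100 ms), while a 100,000-byte response needs three (at least 300 ms). Halving the distance halves every one of those round trips.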

Each layer also represents data differently. The unit of data at a layer is called its Protocol Data Unit (PDU), and it goes by a different name at each layer. For example, at layer 4 it's a segment (or a datagram for UDP), at layer 3 it's a packet, and at layer 2 it's a frame.
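For reference, here is the seven-layer stack with the conventional PDU name at each layer, written as a small Python lookup table. The example protocols are only the ones mentioned in this post, and since layers 5 to 7 don't have a widely agreed PDU name, the sketch simply calls them "data".

```python
from enum import IntEnum


class OSILayer(IntEnum):
    PHYSICAL = 1
    DATA_LINK = 2
    NETWORK = 3
    TRANSPORT = 4
    SESSION = 5
    PRESENTATION = 6
    APPLICATION = 7


# Conventional PDU name at each layer.
PDU_NAMES = {
    OSILayer.APPLICATION: "data",
    OSILayer.PRESENTATION: "data",
    OSILayer.SESSION: "data",
    OSILayer.TRANSPORT: "segment",  # called a "datagram" when the protocol is UDP
    OSILayer.NETWORK: "packet",
    OSILayer.DATA_LINK: "frame",
    OSILayer.PHYSICAL: "bit",
}

# Protocols from this post, placed at the layer where the post discusses them.
EXAMPLE_PROTOCOLS = {
    OSILayer.APPLICATION: ["HTTP"],
    OSILayer.TRANSPORT: ["TCP", "UDP"],
    OSILayer.NETWORK: ["IP"],
    OSILayer.DATA_LINK: ["ARP"],
}


def describe(layer: OSILayer) -> str:
    name = layer.name.replace("_", " ").title()
    text = f"Layer {int(layer)} ({name}): {PDU_NAMES[layer]}"
    protocols = EXAMPLE_PROTOCOLS.get(layer)
    if protocols:
        text += f", e.g. {', '.join(protocols)}"
    return text


for layer in sorted(OSILayer, reverse=True):
    print(describe(layer))
```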

Networking devices are also usually associated with a particular layer. For example, switches can be layer 2 or layer 3 devices, with different capabilities: a layer 2 switch forwards frames based only on MAC addresses, whereas a layer 3 switch can also look inside the packet and make routing decisions based on IP addresses.
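A toy Python model of the two kinds of switch shows how the information available at each layer limits the decision. The layer 2 switch learns which port each MAC address lives on and forwards frames by MAC alone; the layer 3 switch looks up the destination IP address in a routing table using longest-prefix match. Both classes, the MAC strings and the prefixes are hypothetical simplifications, not how any particular device is implemented.

```python
from __future__ import annotations

import ipaddress


class Layer2Switch:
    """Forwards frames by destination MAC, learning MAC -> port from source MACs."""

    def __init__(self) -> None:
        self.mac_table: dict[str, int] = {}

    def receive(self, src_mac: str, dst_mac: str, in_port: int) -> int | None:
        self.mac_table[src_mac] = in_port  # learn which port the sender is on
        return self.mac_table.get(dst_mac)  # None: unknown MAC, flood out of all ports


class Layer3Switch:
    """Forwards packets by destination IP using longest-prefix match on a routing table."""

    def __init__(self, routes: dict[str, int]) -> None:
        # routes maps an IP prefix such as "10.0.1.0/24" to an output port
        self.routes = {ipaddress.ip_network(p): port for p, port in routes.items()}

    def route(self, dst_ip: str) -> int | None:
        addr = ipaddress.ip_address(dst_ip)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None  # no matching route: the packet would be dropped
        return self.routes[max(matches, key=lambda net: net.prefixlen)]
```

With routes for both `10.0.0.0/8` and `10.0.1.0/24`, a packet for `10.0.1.5` goes out of the `/24` port because that is the more specific match.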

Breaking an HTTP request down

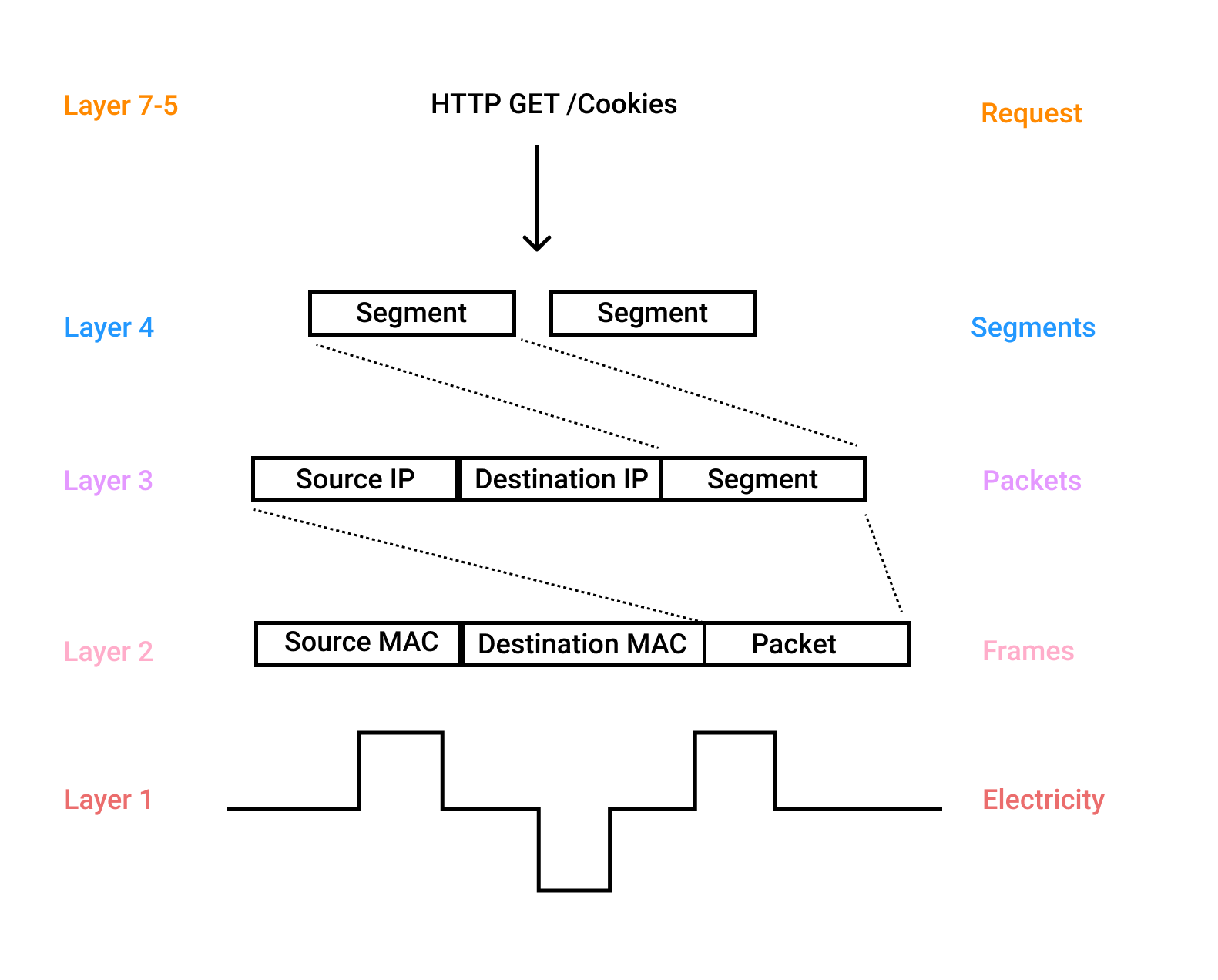

We can apply the OSI model to an HTTP request and get a better sense of how a request is represented at each layer. I have intentionally left out finer details like headers and checksums so we can focus on the core elements.

At layers 7 to 5, we start with a request. This is your GET request, and here we've requested cookies, because when do you not want cookies?
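At this level, an HTTP/1.1 request is just text. Here is a minimal Python sketch that builds the raw bytes of a GET request like the one in the figure; the `/cookies` path and `example.com` host are placeholders chosen for the example, not values taken from the figure.

```python
def build_get_request(host: str, path: str) -> bytes:
    """Return the raw bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",  # request line: method, path, protocol version
        f"Host: {host}",         # the Host header is mandatory in HTTP/1.1
        "Connection: close",     # ask the server to close the connection after responding
        "",                      # an empty line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")  # HTTP/1.1 lines end with CRLF


print(build_get_request("example.com", "/cookies"))
# b'GET /cookies HTTP/1.1\r\nHost: example.com\r\nConnection: close\r\n\r\n'
```

These bytes are what gets handed down to layer 4.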

Aside: The OSI model doesn't do the best job of clarifying exactly what distinguishes layers 7, 6 and 5, which is why some people prefer the TCP/IP model, as it collapses layers 7 to 5 into a single layer called the Application layer.
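The mapping between the two models fits in a small table. This Python sketch uses one common four-layer presentation of the TCP/IP model (Application, Transport, Internet, Link); some textbooks split Link back into separate data link and physical layers.

```python
from __future__ import annotations

# OSI layer number -> layer in a four-layer presentation of the TCP/IP model
OSI_TO_TCPIP = {
    7: "Application",  # OSI Application
    6: "Application",  # OSI Presentation
    5: "Application",  # OSI Session
    4: "Transport",
    3: "Internet",
    2: "Link",         # OSI Data Link
    1: "Link",         # OSI Physical
}


def tcpip_layers_top_down() -> list[str]:
    """List the TCP/IP layers from top to bottom, each once."""
    layers: list[str] = []
    for osi_layer in sorted(OSI_TO_TCPIP, reverse=True):
        name = OSI_TO_TCPIP[osi_layer]
        if name not in layers:
            layers.append(name)
    return layers


print(tcpip_layers_top_down())  # ['Application', 'Transport', 'Internet', 'Link']
```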

At layer 4, the request is broken into one or more segments; this is the responsibility of a protocol like TCP. In TCP, each segment carries a sequence number and must be acknowledged by the receiver, which lets TCP detect lost segments, retransmit them, and deliver the data reliably and in order. As I said earlier, this is where it becomes clear why smaller requests and closer communicating devices are better: fewer bytes mean fewer segments to send and acknowledge, and a shorter distance means each acknowledgement comes back sooner.
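Here's a simplified Python sketch of the idea: split a byte stream into segments, tag each with a sequence number, and on the receiving side put them back in order and compute the cumulative acknowledgement (the next byte the receiver expects). It assumes a deliberately tiny maximum segment size so the split is visible, and it numbers bytes from 0, whereas real TCP starts from a randomly chosen initial sequence number and carries much more in its header.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

MSS = 4  # assumption: a tiny maximum segment size so the split is easy to see


@dataclass(frozen=True)
class Segment:
    seq: int  # offset of the first payload byte (real TCP starts at a random number)
    payload: bytes


def split_into_segments(data: bytes, mss: int = MSS) -> list[Segment]:
    """Sender side: cut the byte stream into segments of at most `mss` bytes."""
    return [Segment(seq=i, payload=data[i:i + mss]) for i in range(0, len(data), mss)]


def reassemble(received: list[Segment]) -> tuple[bytes, int]:
    """Receiver side: rebuild the stream in sequence order.

    Returns the contiguous bytes received so far and the cumulative ACK number,
    i.e. the sequence number of the next byte the receiver expects.
    """
    by_seq = {s.seq: s.payload for s in received}
    data = bytearray()
    next_expected = 0
    while next_expected in by_seq:  # stop at the first gap (a missing segment)
        chunk = by_seq[next_expected]
        data += chunk
        next_expected += len(chunk)
    return bytes(data), next_expected


if __name__ == "__main__":
    segments = split_into_segments(b"GET /cookies")
    random.shuffle(segments)  # segments can arrive out of order
    print(reassemble(segments))  # (b'GET /cookies', 12)
    lost = [s for s in segments if s.seq != 4]  # drop the segment starting at byte 4
    print(reassemble(lost))  # (b'GET ', 4)
```

When the segment starting at byte 4 goes missing, reassembly stops at the gap and the acknowledgement stays at 4 until that segment is retransmitted and arrives.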

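At layer 3, each segment is wrapped in a packet, and at this point the source and destination IP addresses are attached. These identify which computer the packet comes from and which computer it's destined for, whether that's on your local network or across the internet. (TCP normally sizes its segments so that each fits in a single packet; IP can fragment a packet that is too large for a link, but that's the exception rather than the rule.)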
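To see what attaching the IP addresses means in bytes, here is a Python sketch that prepends a minimal 20-byte IPv4 header (no options, no fragmentation) to a segment using the standard `struct` and `socket` modules, then reads the addresses back out. The TTL of 64, the identification of 0 and the example addresses are assumptions for illustration; the checksum is the standard one's-complement sum over the header.

```python
from __future__ import annotations

import socket
import struct


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words (the IPv4 header checksum)."""
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    while total >> 16:  # fold any carry bits back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def wrap_in_ipv4(segment: bytes, src: str, dst: str, ttl: int = 64) -> bytes:
    """Prepend a minimal 20-byte IPv4 header to a TCP segment."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,           # version 4, header length of 5 x 32-bit words
        0,                      # type of service
        20 + len(segment),      # total length: header + payload
        0,                      # identification
        0,                      # flags and fragment offset
        ttl,                    # hops remaining before routers discard the packet
        socket.IPPROTO_TCP,     # protocol number 6: the payload is a TCP segment
        0,                      # checksum placeholder, filled in below
        socket.inet_aton(src),  # source IP address
        socket.inet_aton(dst),  # destination IP address
    )
    checksum = struct.pack("!H", ipv4_checksum(header))
    return header[:10] + checksum + header[12:] + segment


def read_addresses(packet: bytes) -> tuple[str, str]:
    """Pull the source and destination addresses back out of the header."""
    src, dst = struct.unpack("!12x4s4s", packet[:20])
    return socket.inet_ntoa(src), socket.inet_ntoa(dst)
```

A receiver can check the header by recomputing the checksum over all 20 bytes, checksum field included: an intact header gives 0.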

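At layer 2, frames are composed, and the IP address of the next hop is mapped to a MAC address using a protocol like the Address Resolution Protocol (ARP). If the destination is on your local network, the next hop is the destination itself; otherwise, it's your router. MAC addresses are what devices on a local network use to deliver frames to each other, but they only matter for a single hop: each router along the way strips off the frame and builds a new one, with new MAC addresses, for the next link.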
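The key decision a sending host makes here can be sketched in a few lines of Python: if the destination IP is on the local subnet, ARP resolves the destination's own MAC address; otherwise, it resolves the default gateway's. The subnet, the gateway and the dictionary standing in for the operating system's ARP cache are all hypothetical.

```python
from __future__ import annotations

import ipaddress

# Hypothetical host configuration and ARP cache (IP -> MAC learned from ARP replies).
LOCAL_NETWORK = ipaddress.ip_network("192.168.1.0/24")
DEFAULT_GATEWAY = "192.168.1.1"
ARP_CACHE = {
    "192.168.1.1": "aa:bb:cc:00:00:01",   # the router
    "192.168.1.20": "aa:bb:cc:00:00:20",  # another machine on the same network
}


def next_hop(dst_ip: str) -> str:
    """The IP address the frame must be delivered to on the local network."""
    if ipaddress.ip_address(dst_ip) in LOCAL_NETWORK:
        return dst_ip       # same subnet: deliver directly to the destination
    return DEFAULT_GATEWAY  # anywhere else: hand the frame to the router


def destination_mac(dst_ip: str) -> str | None:
    """MAC address to put in the frame, or None if an ARP request is needed first."""
    return ARP_CACHE.get(next_hop(dst_ip))
```

For a remote server such as `203.0.113.7`, the frame is addressed to the router's MAC, even though the packet inside still carries the server's IP address.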

At layer 1, the frames are converted into bits and transmitted as signals over the physical medium: electrical signals over copper, radio waves over Wi-Fi, or light in the case of fibre.

Your HTTP request has now gone through all the layers and been transmitted across the network. At the receiving end, it is reassembled by going back up the layers until your HTTP request is rebuilt on the server side.
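The symmetry between sending and receiving is easier to see in code. This Python sketch uses readable placeholder headers like `[L4]` instead of the real binary ones (and ignores the trailer that layer 2 adds): going down the stack, each layer prepends its header; going up, each layer checks and strips its own header before handing the rest upward.

```python
# Readable placeholder headers standing in for the real binary TCP, IP and Ethernet headers.
LAYERS_TOP_DOWN = ["L4", "L3", "L2"]


def send_down(payload: bytes) -> bytes:
    """Sender: each layer prepends its own header to whatever the layer above handed it."""
    for layer in LAYERS_TOP_DOWN:
        payload = f"[{layer}]".encode() + payload
    return payload


def receive_up(frame: bytes) -> bytes:
    """Receiver: each layer checks and strips its own header, then passes the rest up."""
    for layer in reversed(LAYERS_TOP_DOWN):
        header = f"[{layer}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"malformed data: expected a {layer} header")
        frame = frame[len(header):]
    return frame


wire = send_down(b"GET /cookies")
print(wire)              # b'[L2][L3][L4]GET /cookies'
print(receive_up(wire))  # b'GET /cookies'
```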

Networking components rebuild your request up to different layers of the OSI model. For example, a layer 7 load balancer that routes based on information in the HTTP request, like the request path, needs to reassemble your request all the way up to layer 7. A layer 4 load balancer, by contrast, only processes your request up to layer 4, at which point it can make a routing decision based on IP addresses and ports.
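The difference in what each load balancer can see shows up directly in the inputs to its routing decision. In this Python sketch, the layer 4 picker only has the connection's addresses and ports, so it hashes them to choose a backend; the layer 7 picker parses the HTTP request line and routes on the path. The backend addresses and path rules are hypothetical, and real load balancers offer many more strategies than these two.

```python
import hashlib

# Hypothetical backend pools.
BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
PATH_RULES = {"/cookies": "10.0.0.21", "/api": "10.0.0.22"}  # path prefix -> backend


def layer4_pick(src_ip: str, src_port: int, dst_ip: str, dst_port: int) -> str:
    """Layer 4: only addresses and ports are visible, so hash the connection's 4-tuple."""
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return BACKENDS[int.from_bytes(digest[:4], "big") % len(BACKENDS)]


def layer7_pick(raw_request: bytes, default: str = BACKENDS[0]) -> str:
    """Layer 7: parse the HTTP request line and route on the path."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    _method, path, _version = request_line.split(" ")
    for prefix, backend in PATH_RULES.items():
        if path.startswith(prefix):
            return backend
    return default
```

Hashing with SHA-256 rather than Python's built-in `hash()` keeps the choice stable across processes (string hashing is randomized per process), so the same connection details always map to the same backend.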

Time to network

If you're focused on backend engineering, you might not enjoy networking, but understanding the basics of the networking stack and how your request is broken down will help you design more robust and performant architectures.

If you've enjoyed reading this, reach out to me on Twitter @javaad_patel with your thoughts, or subscribe and read along as I continue this networking journey.