Networking for backend engineers: TCP

Understanding the TCP protocol: How data is transferred reliably between computers.

Related networking articles:

* Networking for backend engineers: OSI Model

If you have ever wondered how clients such as mobile apps and websites send information to servers, then this article will help. In a previous article, I discussed networking at a broader level, covering the OSI model and the layers in a network. In this article, we'll take a deeper look at the most prevalent protocol for communication between two computers: TCP.

TCP is a Layer 4 (Transport Layer) protocol that dominates the internet, mainly because HTTP/1.0, HTTP/1.1 and HTTP/2 all run on top of it (HTTP/3 instead uses QUIC, a transport protocol built on top of UDP).

TCP is a stateful, connection-oriented protocol that allows two devices to communicate reliably. It guarantees that data arrives in order, without gaps or duplicates: it tracks ordering using sequence numbers and acknowledgements, and it detects corruption using checksums. Additionally, it implements two mechanisms to keep communication stable: flow control, which avoids overwhelming the receiver, and congestion control, which avoids flooding the underlying network.

In this article, we'll discuss the TCP lifecycle, characteristics and mechanisms that allow it to provide reliable communication.

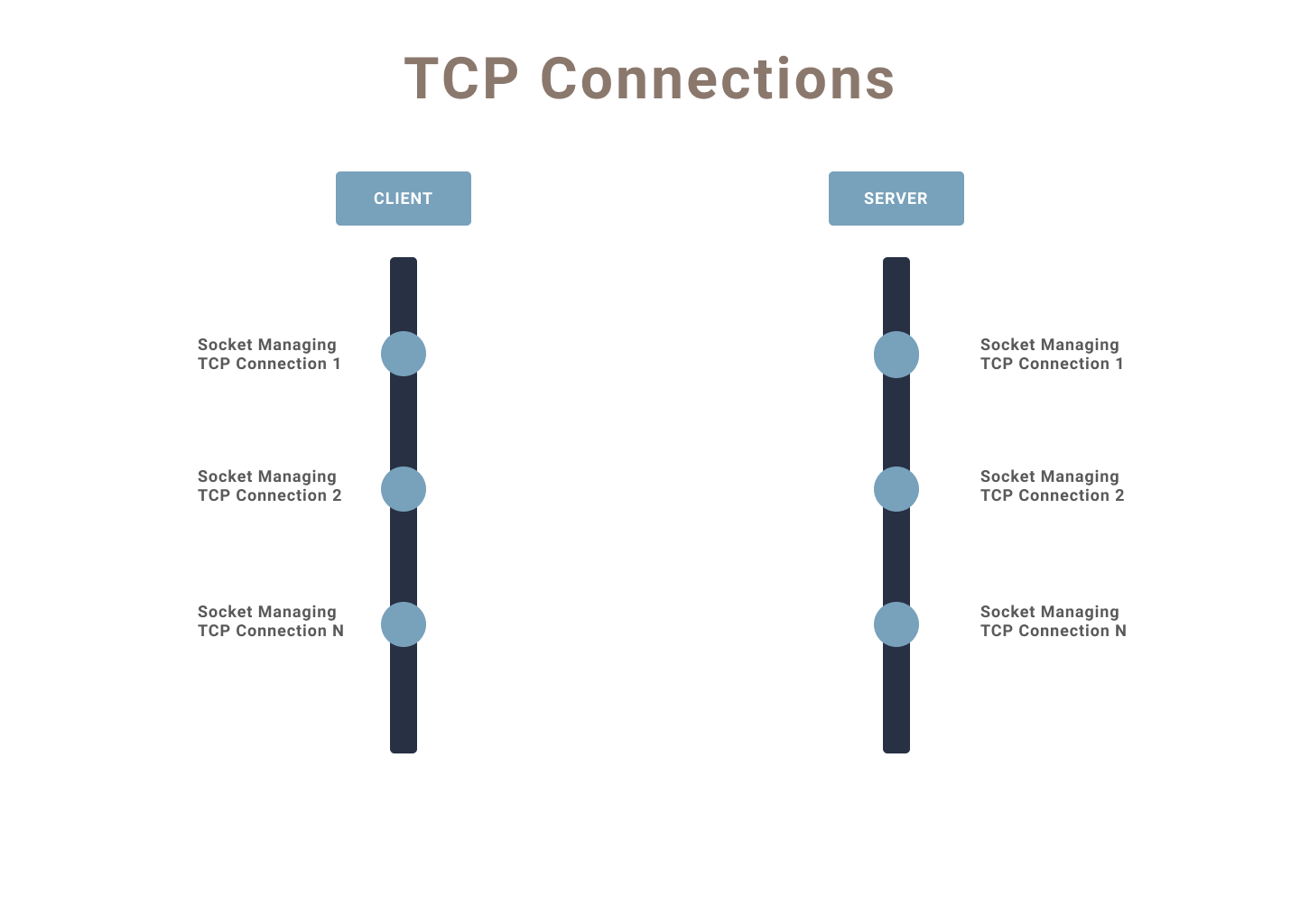

Connection management

Before any data can be sent between two computers, a new TCP connection needs to be opened. The TCP connection is managed on each side, sender and receiver, by the underlying operating systems through a socket.

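Each TCP connection is uniquely identified by four values: the source IP address, source port, destination IP address and destination port. Port numbers are 16-bit, so a client can have at most 65,536 (2^16) connections from a single IP address to the same destination IP and port at any one time. In practice the limit is lower: outgoing connections draw their source port from a smaller ephemeral port range, each socket consumes memory and a file descriptor, and closed connections keep their port busy for a while in the TIME-WAIT state. If you have ever encountered the dreaded socket exhaustion stack trace, this is usually why. A server, by contrast, can accept far more than 65,536 connections on a single listening port, because each client brings its own IP address and port.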
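A TCP connection is identified by its 4-tuple (source IP, source port, destination IP, destination port), so a source port only needs to be unique per destination. The minimal Python sketch below models how a client might pick ephemeral source ports under that rule. `EphemeralPortAllocator` is a hypothetical class, and its default range mirrors Linux's default `net.ipv4.ip_local_port_range` (32768 to 60999); real kernels also randomize the starting port and keep ports reserved while sockets sit in TIME-WAIT.

```python
from typing import Set, Tuple

# (source IP, source port, destination IP, destination port)
FourTuple = Tuple[str, int, str, int]


class EphemeralPortAllocator:
    """Hypothetical, simplified model of client-side source port selection."""

    def __init__(self, low: int = 32768, high: int = 60999) -> None:
        # Defaults mirror Linux's net.ipv4.ip_local_port_range.
        self.low = low
        self.high = high
        self.connections: Set[FourTuple] = set()

    def connect(self, src_ip: str, dst_ip: str, dst_port: int) -> FourTuple:
        # A source port is usable as long as the resulting 4-tuple is unique.
        for port in range(self.low, self.high + 1):
            key = (src_ip, port, dst_ip, dst_port)
            if key not in self.connections:
                self.connections.add(key)
                return key
        raise OSError("no free ephemeral port for this destination")

    def close(self, key: FourTuple) -> None:
        # Simplification: real kernels keep the port busy for a while in TIME-WAIT.
        self.connections.discard(key)


# Usage with a deliberately tiny range of two ports:
alloc = EphemeralPortAllocator(low=40000, high=40001)
first = alloc.connect("10.0.0.5", "10.0.0.9", 443)    # source port 40000
second = alloc.connect("10.0.0.5", "10.0.0.9", 443)   # source port 40001
# A third connection to 10.0.0.9:443 would now raise OSError, but a
# different destination port can reuse source port 40000:
third = alloc.connect("10.0.0.5", "10.0.0.9", 5432)   # source port 40000
```

The addresses above are made up; the point is that exhaustion happens per destination, which is why a client hammering one backend through new connections runs out of ports long before the machine as a whole does.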

As TCP is stateful, a connection moves through several states. Loosely grouped, the main ones are:

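- Opening state - where the connection is being created (SYN-SENT on the client, SYN-RECEIVED on the server)

- Established state - where the connection is open and transferring data (ESTABLISHED)

- Closing state - where the connection is being torn down (FIN-WAIT-1, FIN-WAIT-2, CLOSE-WAIT, CLOSING, LAST-ACK and TIME-WAIT), before it finally reaches CLOSED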
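The grouping above simplifies the states defined in the TCP specification (RFC 793, since updated by RFC 9293). Below is a minimal Python sketch of the common path through them, assuming one side actively opens and the connection closes normally. It omits less common transitions such as simultaneous open and the CLOSING state used during a simultaneous close, and the event names and the `phase` helper are hypothetical labels for illustration.

```python
from enum import Enum


class State(Enum):
    CLOSED = "CLOSED"
    LISTEN = "LISTEN"
    SYN_SENT = "SYN-SENT"
    SYN_RECEIVED = "SYN-RECEIVED"
    ESTABLISHED = "ESTABLISHED"
    FIN_WAIT_1 = "FIN-WAIT-1"
    FIN_WAIT_2 = "FIN-WAIT-2"
    TIME_WAIT = "TIME-WAIT"
    CLOSE_WAIT = "CLOSE-WAIT"
    LAST_ACK = "LAST-ACK"


# (current state, event) -> next state; event names are illustrative.
TRANSITIONS = {
    # Opening
    (State.CLOSED, "connect"): State.SYN_SENT,            # client sends SYN
    (State.CLOSED, "listen"): State.LISTEN,               # server waits for SYNs
    (State.LISTEN, "recv SYN"): State.SYN_RECEIVED,       # server sends SYN/ACK
    (State.SYN_SENT, "recv SYN/ACK"): State.ESTABLISHED,  # client sends ACK
    (State.SYN_RECEIVED, "recv ACK"): State.ESTABLISHED,
    # Active close (the side that closes first)
    (State.ESTABLISHED, "close"): State.FIN_WAIT_1,       # sends FIN
    (State.FIN_WAIT_1, "recv ACK"): State.FIN_WAIT_2,
    (State.FIN_WAIT_2, "recv FIN"): State.TIME_WAIT,      # sends ACK
    (State.TIME_WAIT, "timeout"): State.CLOSED,           # after 2 * MSL
    # Passive close (the side that receives the first FIN)
    (State.ESTABLISHED, "recv FIN"): State.CLOSE_WAIT,    # sends ACK
    (State.CLOSE_WAIT, "close"): State.LAST_ACK,          # sends FIN
    (State.LAST_ACK, "recv ACK"): State.CLOSED,
}


def run(events, state=State.CLOSED):
    """Apply events in order; raises KeyError on an unsupported transition."""
    for event in events:
        state = TRANSITIONS[(state, event)]
    return state


def phase(state):
    """Map a TCP state onto the simplified groups used in this article."""
    if state in (State.SYN_SENT, State.SYN_RECEIVED):
        return "opening"
    if state is State.ESTABLISHED:
        return "established"
    if state in (State.CLOSED, State.LISTEN):
        return "not connected"
    return "closing"


client = run(["connect", "recv SYN/ACK"])
phase(client)  # "established"
```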

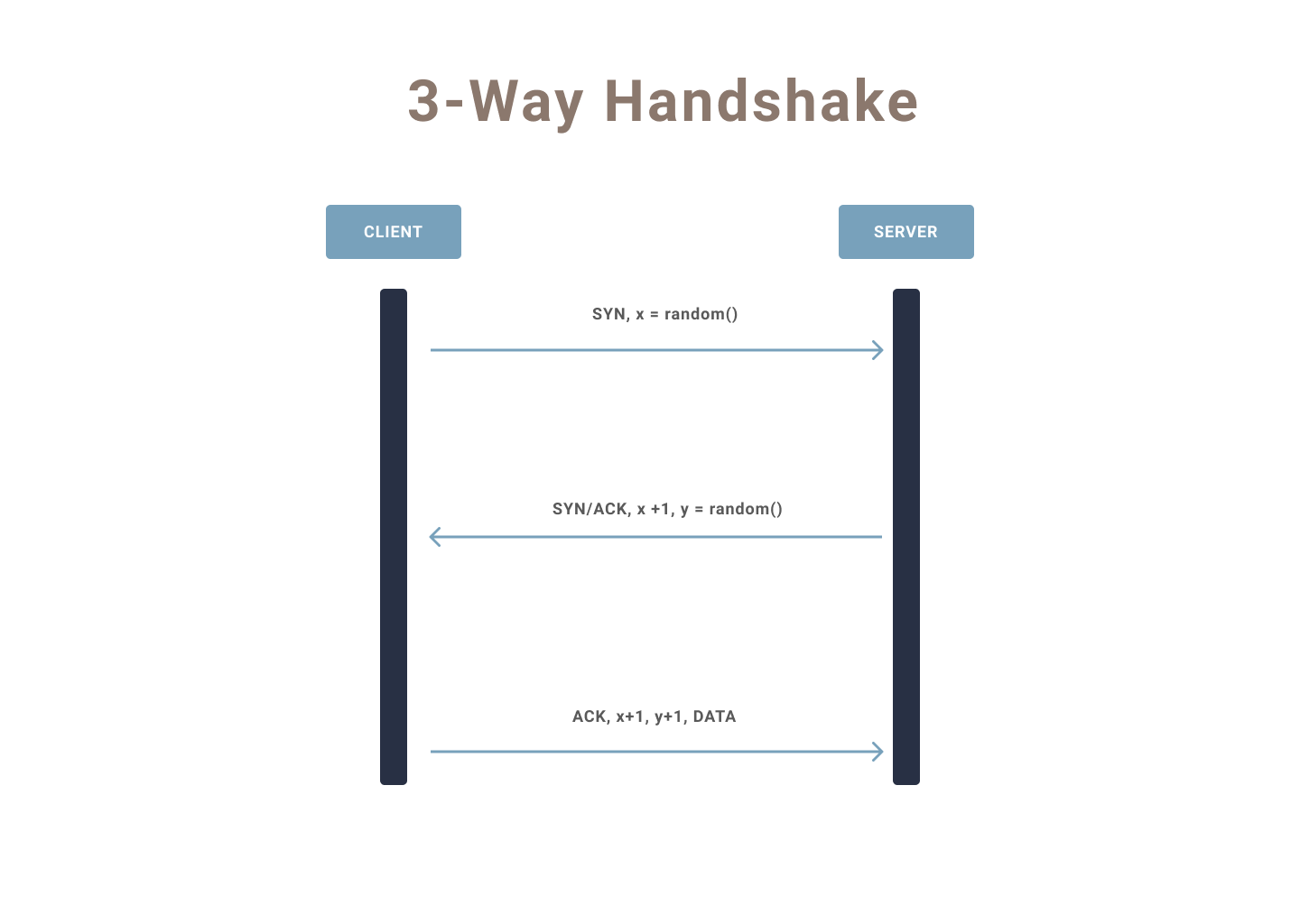

3-Way handshake

Establishing a TCP connection requires an interaction between the client and server, known as the 3-way handshake.

The process of creating a connection is shown in the sequence diagram below. First, the client initiating the connection picks a random initial sequence number, x, and sends it to the server in a SYN segment. TCP numbers every byte it sends, and each segment carries the sequence number of its first byte, which is how the receiver puts data back in order. The initial number is chosen at random so that an attacker who cannot observe the traffic cannot guess valid sequence numbers and inject forged segments into the connection, and so that delayed segments from an earlier connection between the same addresses and ports are not mistaken for part of the new one. The SYN itself consumes one sequence number, so the first byte of data the client sends is numbered x + 1.

A segment is the Protocol Data Unit (PDU) of TCP. A byte stream (your data) is broken up into multiple segments, which are the units TCP works with and transmits over the network.

The server then chooses its own random initial sequence number, y, and responds with a SYN/ACK segment. The ACK part acknowledges the client's SYN by carrying the acknowledgement number x + 1, the next sequence number the server expects from the client.

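Finally, the client responds with an ACK carrying the acknowledgement number y + 1, confirming that it received the server's SYN. The client can start sending data immediately, even in this same segment, so opening a connection costs one round trip before the first request can go out.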

At this point a connection is established on both the client and the server, each knowing the starting sequence number of the other. This allows the data to be delivered in order and without any holes.
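The numbers exchanged in the handshake can be sketched in a few lines of Python. This is a simplified model, not how an operating system implements it: `Segment` and `three_way_handshake` are hypothetical names, and the initial sequence numbers come from `secrets.randbelow`, whereas real stacks derive them with a keyed hash as recommended by RFC 6528. All arithmetic is modulo 2^32 because sequence numbers are 32-bit.

```python
import secrets
from dataclasses import dataclass
from typing import List, Optional

SEQ_SPACE = 2 ** 32  # sequence numbers are 32-bit


@dataclass
class Segment:
    flags: str
    seq: int
    ack: Optional[int] = None  # acknowledgement number, if the ACK flag is set


def three_way_handshake(client_isn: Optional[int] = None,
                        server_isn: Optional[int] = None) -> List[Segment]:
    x = secrets.randbelow(SEQ_SPACE) if client_isn is None else client_isn
    y = secrets.randbelow(SEQ_SPACE) if server_isn is None else server_isn
    # 1. Client -> server: SYN carrying the client's initial sequence number.
    syn = Segment("SYN", seq=x)
    # 2. Server -> client: its own ISN, acknowledging x + 1 (the SYN used one number).
    syn_ack = Segment("SYN/ACK", seq=y, ack=(x + 1) % SEQ_SPACE)
    # 3. Client -> server: acknowledges y + 1; the client's data starts at x + 1.
    ack = Segment("ACK", seq=(x + 1) % SEQ_SPACE, ack=(y + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(segment)
# Segment(flags='SYN', seq=1000, ack=None)
# Segment(flags='SYN/ACK', seq=5000, ack=1001)
# Segment(flags='ACK', seq=1001, ack=5001)
```

In application code you never build these segments yourself: in Python, for example, `socket.create_connection((host, port))` asks the operating system to perform the whole handshake and returns once the connection is established.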

Because this handshake costs a full round trip before any data can be sent, HTTP/1.1 made persistent connections the default (HTTP/1.0 clients had to opt in with the "Connection: keep-alive" header), allowing a single connection to be reused for many requests for as long as both sides allow. The round trip required to open a connection is also one reason why it's beneficial to keep your client and server geographically close.
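A back-of-the-envelope model shows why connection reuse matters. The sketch below assumes each handshake costs one round trip, each request/response costs one more, and requests are sent one after another; it ignores TLS, server processing time and congestion window growth. `sequential_latency_ms` is a hypothetical helper.

```python
def sequential_latency_ms(requests: int, rtt_ms: float, reuse_connection: bool) -> float:
    """Rough total latency for `requests` sequential request/response pairs."""
    handshakes = 1 if reuse_connection else requests  # one handshake per new connection
    return (handshakes + requests) * rtt_ms


# 10 requests over a 50 ms round trip:
print(sequential_latency_ms(10, 50, reuse_connection=False))  # 1000
print(sequential_latency_ms(10, 50, reuse_connection=True))   # 550
```

Because both totals scale linearly with the round-trip time, halving the RTT (for example, by moving the client closer to the server) halves them too.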

A sequence number is a 32-bit value that advances by the number of bytes sent. Eventually, after at most 4 GiB of data, it wraps around from 2^32 - 1 back to 0, so implementations must compare sequence numbers using modular arithmetic rather than a plain "less than".
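Because sequence numbers wrap, a plain `a < b` comparison breaks near the boundary. A common approach, used for example by the Linux kernel's `before()` helper, is to subtract modulo 2^32 and interpret the result as a signed 32-bit value. The Python sketch below reproduces that idea; `advance` and `seq_before` are hypothetical names, and the comparison is only meaningful when the two numbers are less than 2^31 apart.

```python
SEQ_MOD = 2 ** 32
HALF = 2 ** 31


def advance(seq: int, nbytes: int) -> int:
    """Sequence number after sending `nbytes` bytes starting at `seq`."""
    return (seq + nbytes) % SEQ_MOD


def seq_before(a: int, b: int) -> bool:
    """True if `a` comes before `b`, treating (a - b) mod 2^32 as a signed 32-bit value."""
    return (a - b) % SEQ_MOD >= HALF


near_end = 4_294_967_290            # 2^32 - 6
after = advance(near_end, 10)       # wraps around
print(after)                        # 4
print(near_end < after)             # False: the naive comparison gets it wrong
print(seq_before(near_end, after))  # True
```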

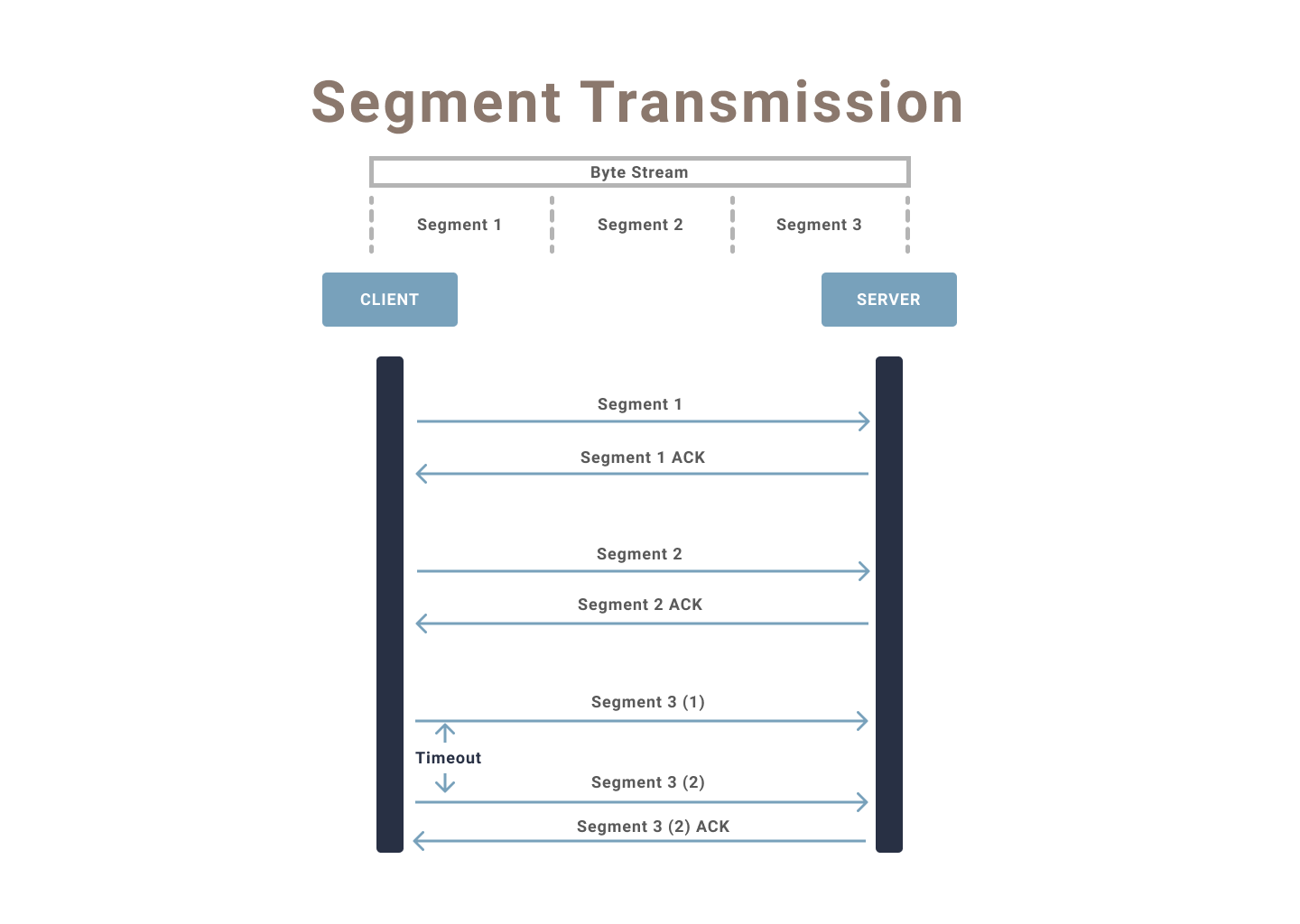

Transmitting data between client and server

TCP breaks the data to be transmitted into multiple segments. Each segment carries the sequence number of its first byte (discussed above), so the numbers of consecutive segments differ by the amount of data they carry.
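As a rough illustration, the Python sketch below splits a byte string into segments of at most `mss` bytes (the maximum segment size, typically around 1460 bytes on Ethernet) and labels each with the sequence number of its first byte. `split_into_segments` is a hypothetical helper; it assumes the handshake used initial sequence number `isn`, so data starts at `isn + 1`, and it ignores headers and options.

```python
from typing import List, Tuple

SEQ_MOD = 2 ** 32


def split_into_segments(data: bytes, isn: int, mss: int) -> List[Tuple[int, bytes]]:
    """Return (sequence number, payload) pairs; each number is that of the payload's first byte."""
    first = (isn + 1) % SEQ_MOD  # the SYN consumed sequence number `isn`
    return [((first + offset) % SEQ_MOD, data[offset:offset + mss])
            for offset in range(0, len(data), mss)]


print(split_into_segments(b"hello world", isn=1000, mss=4))
# [(1001, b'hell'), (1005, b'o wo'), (1009, b'rld')]
```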

The receiver acknowledges the data it receives. Acknowledgements are cumulative: an ACK carrying acknowledgement number N means "I have received every byte before N", so one ACK can cover several segments. If the sender does not receive an acknowledgement for a segment before its retransmission timeout expires, it re-transmits the segment.

This behavior allows TCP to guarantee that, as long as the network eventually delivers packets, every segment will eventually reach the receiver.
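The sketch below models cumulative acknowledgements and timeout-based retransmission in Python. It is deliberately simplified: `Receiver` and `transmit` are hypothetical names, sequence numbers are assumed not to wrap, losses are scripted through `lost_once` rather than random, and each pass of the loop stands in for one retransmission timeout. Real TCP stacks also use mechanisms this sketch leaves out, such as fast retransmit and selective acknowledgements (SACK).

```python
from typing import Dict, Iterable, List, Tuple


class Receiver:
    def __init__(self, first_seq: int) -> None:
        self.next_expected = first_seq            # cumulative ACK number
        self.out_of_order: Dict[int, bytes] = {}  # segments buffered beyond a gap
        self.delivered = bytearray()              # in-order data for the application

    def on_segment(self, seq: int, payload: bytes) -> int:
        if seq == self.next_expected:
            self._deliver(payload)
            # Fill the gap with any buffered segments that now line up.
            while self.next_expected in self.out_of_order:
                self._deliver(self.out_of_order.pop(self.next_expected))
        elif seq > self.next_expected:
            self.out_of_order[seq] = payload      # hold until the gap is filled
        # seq < next_expected: a duplicate of delivered data; just re-ACK.
        return self.next_expected

    def _deliver(self, payload: bytes) -> None:
        self.delivered += payload
        self.next_expected += len(payload)


def transmit(segments: List[Tuple[int, bytes]], receiver: Receiver,
             lost_once: Iterable[int] = ()) -> int:
    """Send until everything is acknowledged; returns the number of passes (timeouts + 1)."""
    dropped = set(lost_once)  # seqs whose first transmission the network loses
    acked = segments[0][0]
    end = segments[-1][0] + len(segments[-1][1])
    passes = 0
    while acked < end:
        passes += 1
        for seq, payload in segments:
            if seq + len(payload) <= acked:
                continue                  # already covered by a cumulative ACK
            if seq in dropped:
                dropped.discard(seq)      # lost this time; resent after the timeout
                continue
            acked = max(acked, receiver.on_segment(seq, payload))
    return passes


segments = [(1, b"abc"), (4, b"def"), (7, b"ghi")]
rx = Receiver(first_seq=1)
print(transmit(segments, rx, lost_once={4}))  # 2
print(bytes(rx.delivered))                    # b'abcdefghi'
```

When the segment at sequence number 4 is lost, the receiver keeps acknowledging 4 and buffers the segment at 7; once the retransmission fills the gap, a single ACK of 10 covers both.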

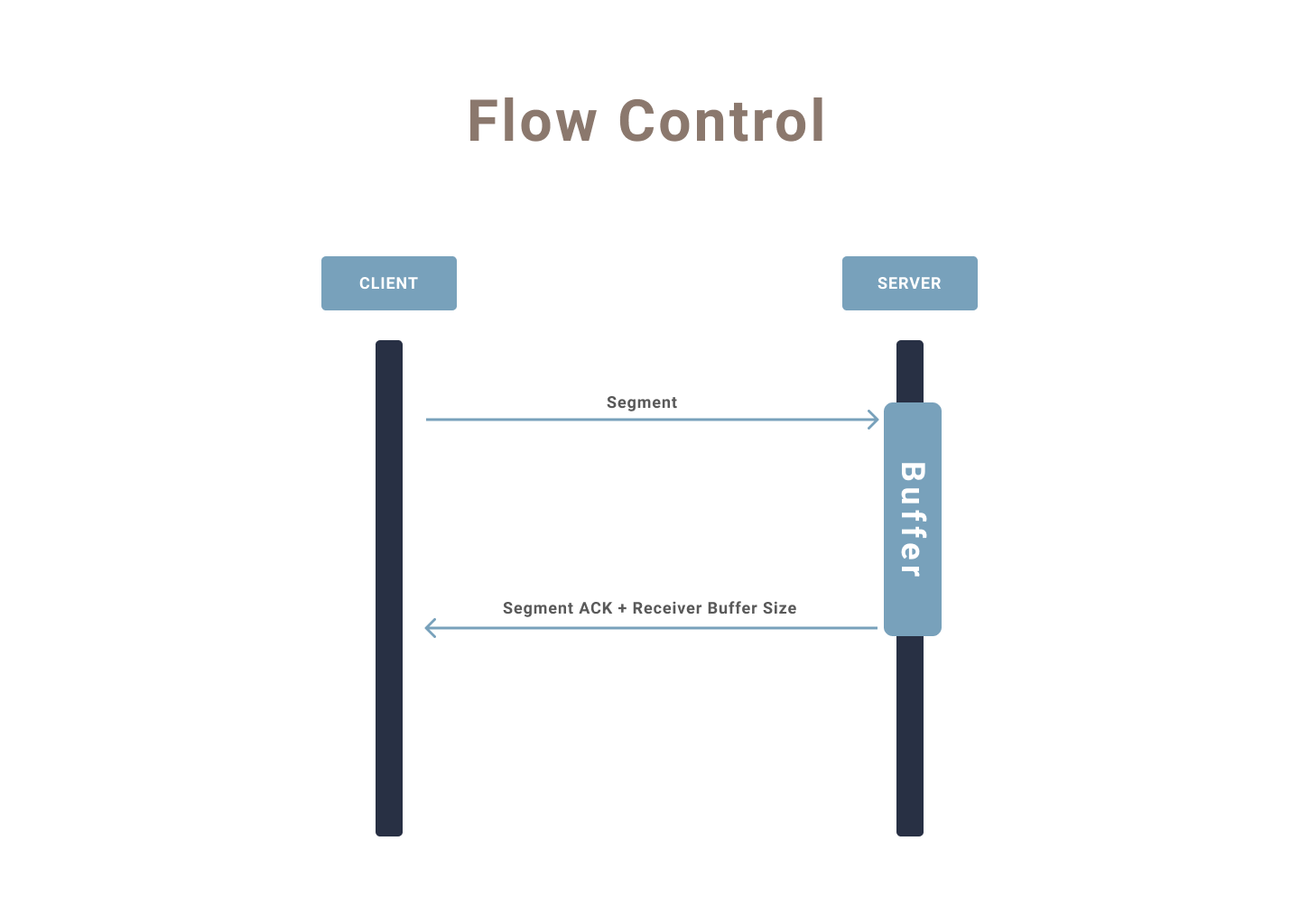

Flow control

To avoid overwhelming the server with more data than it can handle, TCP implements a mechanism called flow control. Flow control is akin to rate limiting, but it works on a per-connection basis and is driven by the receiver.

The server's operating system stores incoming data that the application has not yet read in a receive buffer. With every acknowledgement, it advertises how much free space remains in that buffer, a value known as the receive window.

The client never sends more unacknowledged data than the advertised window allows, so it slows down as the server's buffer fills up and pauses entirely if the window drops to zero.
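The bookkeeping behind flow control can be sketched in Python as follows. `ReceiveBuffer` and `sendable_bytes` are hypothetical names; the model counts bytes, ignores window scaling, and simply refuses data that does not fit, which a well-behaved sender would never send in the first place.

```python
class ReceiveBuffer:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.buffered = 0  # bytes received but not yet read by the application

    @property
    def window(self) -> int:
        """Free space, advertised to the sender with every ACK."""
        return self.capacity - self.buffered

    def receive(self, nbytes: int) -> int:
        accepted = min(nbytes, self.window)
        self.buffered += accepted
        return accepted

    def app_read(self, nbytes: int) -> int:
        taken = min(nbytes, self.buffered)
        self.buffered -= taken  # reading frees space, reopening the window
        return taken


def sendable_bytes(advertised_window: int, bytes_in_flight: int) -> int:
    """How much more the sender may transmit without exceeding the receiver's window."""
    return max(advertised_window - bytes_in_flight, 0)


buf = ReceiveBuffer(capacity=4096)
buf.receive(3000)
print(buf.window)                     # 1096
print(sendable_bytes(buf.window, 0))  # 1096
buf.receive(1096)
print(buf.window)                     # 0 (the sender must pause)
buf.app_read(1024)
print(buf.window)                     # 1024 (the sender may resume)
```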

Congestion control

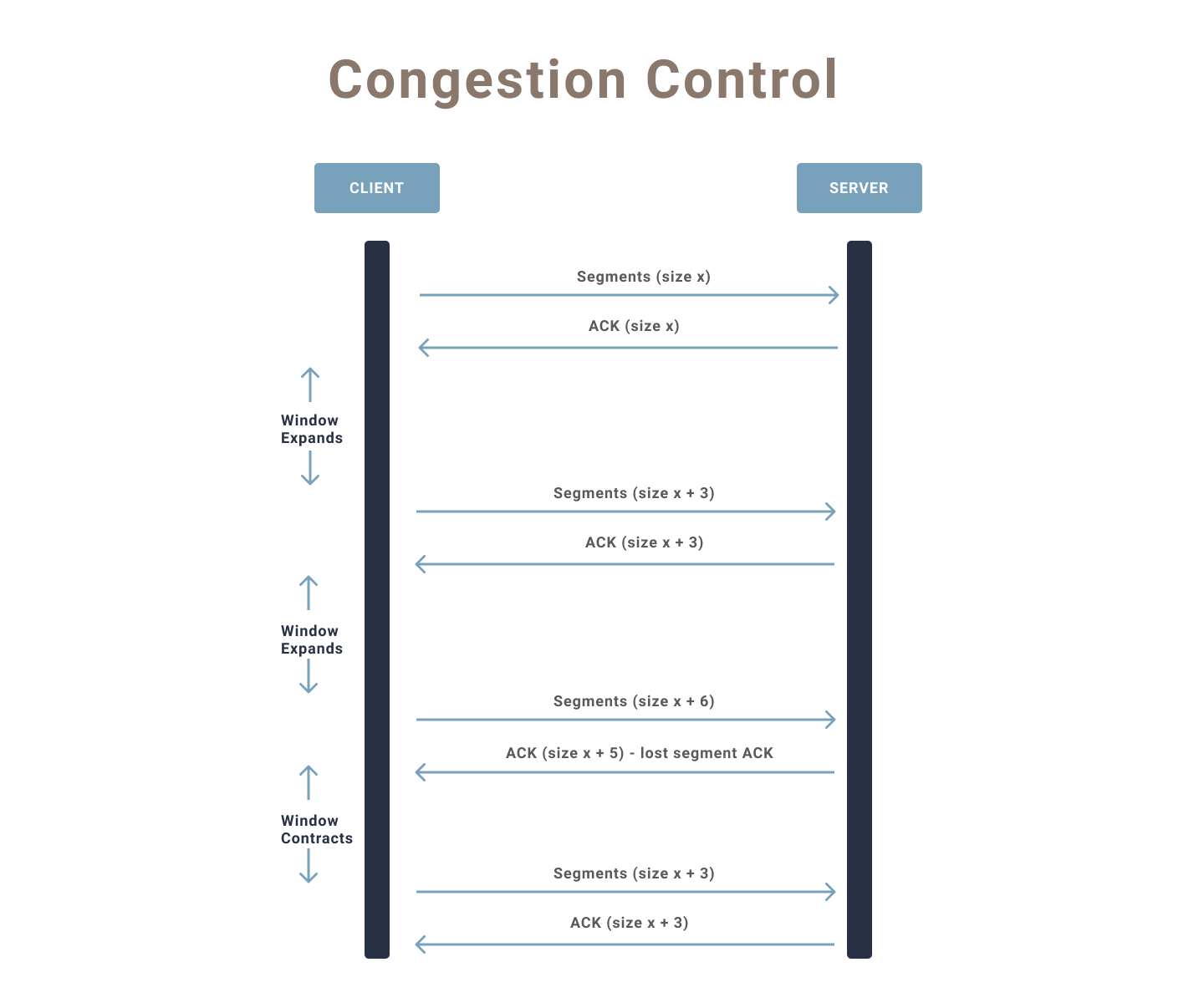

If flow control protects the individual server from being overwhelmed, congestion control is the mechanism TCP uses to avoid overwhelming the underlying network.

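The client maintains a congestion window, which limits how much data (conceptually, how many segments) it can send without receiving an acknowledgement, i.e. how much can be in flight at once. The sender never has more data in flight than the smaller of the congestion window and the receiver's advertised window.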

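When a new TCP connection is established, this window starts at a small system default (Linux, for example, uses 10 segments). During this phase, known as slow start, the window grows by one segment for every acknowledgement received, which roughly doubles it every round trip. Once it reaches a threshold (the slow start threshold), growth becomes linear, a phase known as congestion avoidance. If an acknowledgement is not received within the timeout period, TCP treats the loss as a sign of congestion: classic algorithms such as TCP Reno halve the threshold and shrink the congestion window back to one segment, then grow it again. Modern systems often use other algorithms, such as CUBIC (the Linux default), but the principle is the same.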
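To see how the window evolves, here is a deliberately coarse Python sketch of Reno-style behaviour, measured in segments and updated once per round trip. `cwnd_trace` and its parameters are hypothetical. It starts from a window of 1 segment for readability rather than a real system default, doubles the window each round trip (slow start) until it reaches the threshold `ssthresh`, then adds one segment per round trip (congestion avoidance). On a timeout it sets the threshold to half the current window and restarts from one segment, in the spirit of RFC 5681.

```python
def cwnd_trace(rounds, initial_cwnd=1, ssthresh=16, loss_rounds=frozenset()):
    """Congestion window (in segments) at the start of each round trip."""
    cwnd = initial_cwnd
    trace = []
    for rtt in range(rounds):
        trace.append(cwnd)
        if rtt in loss_rounds:
            # Timeout: remember half the window as the new threshold, restart small.
            ssthresh = max(cwnd // 2, 2)
            cwnd = 1
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)  # slow start: exponential growth
        else:
            cwnd += 1                       # congestion avoidance: linear growth
    return trace


print(cwnd_trace(10, ssthresh=16, loss_rounds={7}))
# [1, 2, 4, 8, 16, 17, 18, 19, 1, 2]
```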

Because every new connection starts with a congestion window that is usually far below the bandwidth the network could actually sustain, a fresh connection transfers data more slowly than a warmed-up one. This is sometimes referred to as TCP cold-start, and it is another reason why TCP connections are re-used to optimize the speed of data transfer between client and server.
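A rough calculation shows what this costs. Assuming no losses, a receive window that never limits the sender, and a congestion window that doubles every round trip with no threshold, the hypothetical helper below counts the round trips needed to send a given number of segments. With these assumptions, a fresh connection starting at 10 segments needs four round trips to send 100 segments, while a warmed-up connection whose window has already grown to 100 segments needs only one.

```python
def round_trips_to_send(total_segments: int, cwnd: int) -> int:
    """Round trips to send `total_segments`, doubling the window each RTT (no losses)."""
    sent = 0
    rounds = 0
    while sent < total_segments:
        sent += cwnd  # a full window goes out this round trip
        cwnd *= 2     # slow start: each ACK grows the window by one segment
        rounds += 1
    return rounds


print(round_trips_to_send(100, cwnd=10))   # 4 (10 + 20 + 40 + 80 >= 100)
print(round_trips_to_send(100, cwnd=100))  # 1
```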

Summarizing TCP characteristics

TCP is an amazing protocol and remains ubiquitous: most of the internet still relies on it. This was a very brief look at TCP, and there's much more to learn, but as a backend engineer, even knowing these characteristics and mechanisms will help you understand what is happening at a lower level when you're debugging API issues.

If you've enjoyed reading this, reach out to me on Twitter @javaad_patel with your thoughts or subscribe and read along as I go down this networking journey.